WHY DOES SEXUAL REPRESSION EXIST?

/ |

| Egon Schiele, 1917 |

|

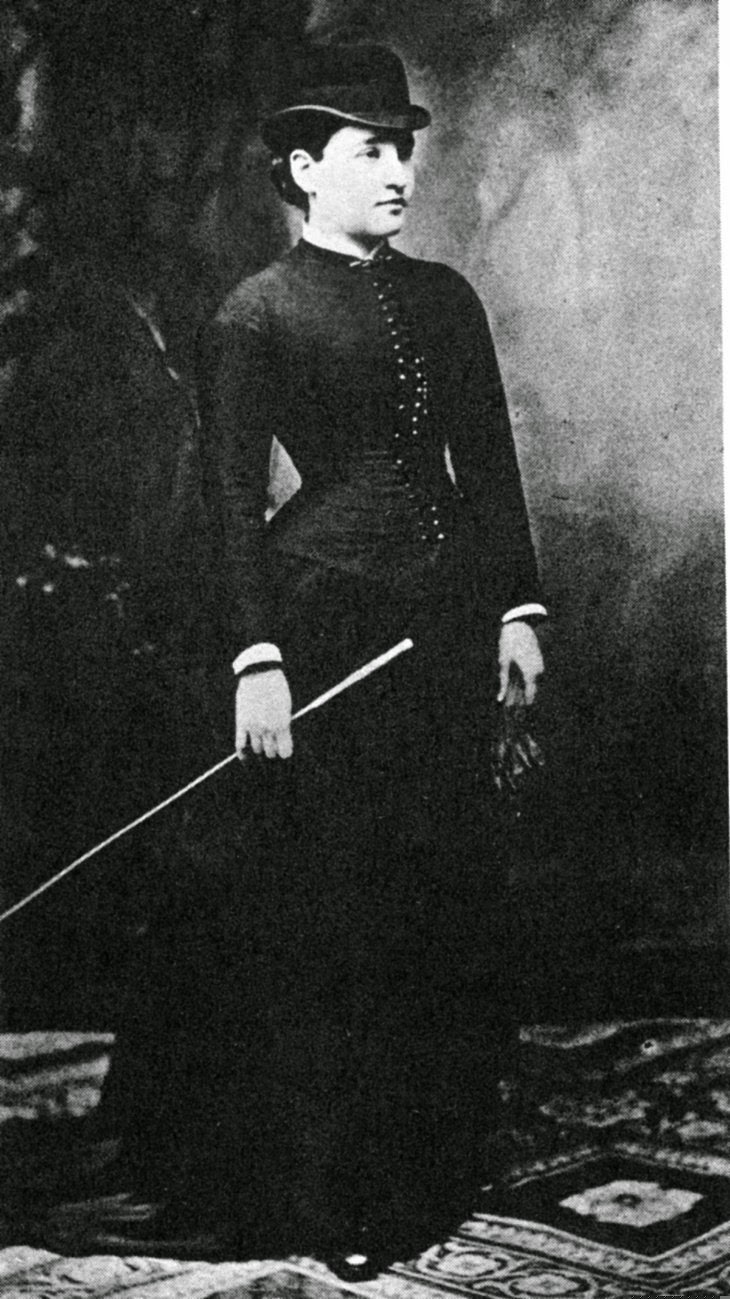

| Freud’s first patient cured, Anna O. |

|

| sexual ranking at IMF meeting |

|

| jewelry ad, 2006 |

|

| luggage ad, 2006 |


| The True Roman Self-Image of their Heroic Past: Oath of the Horatii |

|

| Minsk demonstration 2006 |

|

| Katmandu 2006 |

|

| Jerusalem stalemate 2000 |

|

| Mt. Temptation, traditionally where Jesus spent 40 days in wilderness |

|

| Greek hoplite battle |

|

|

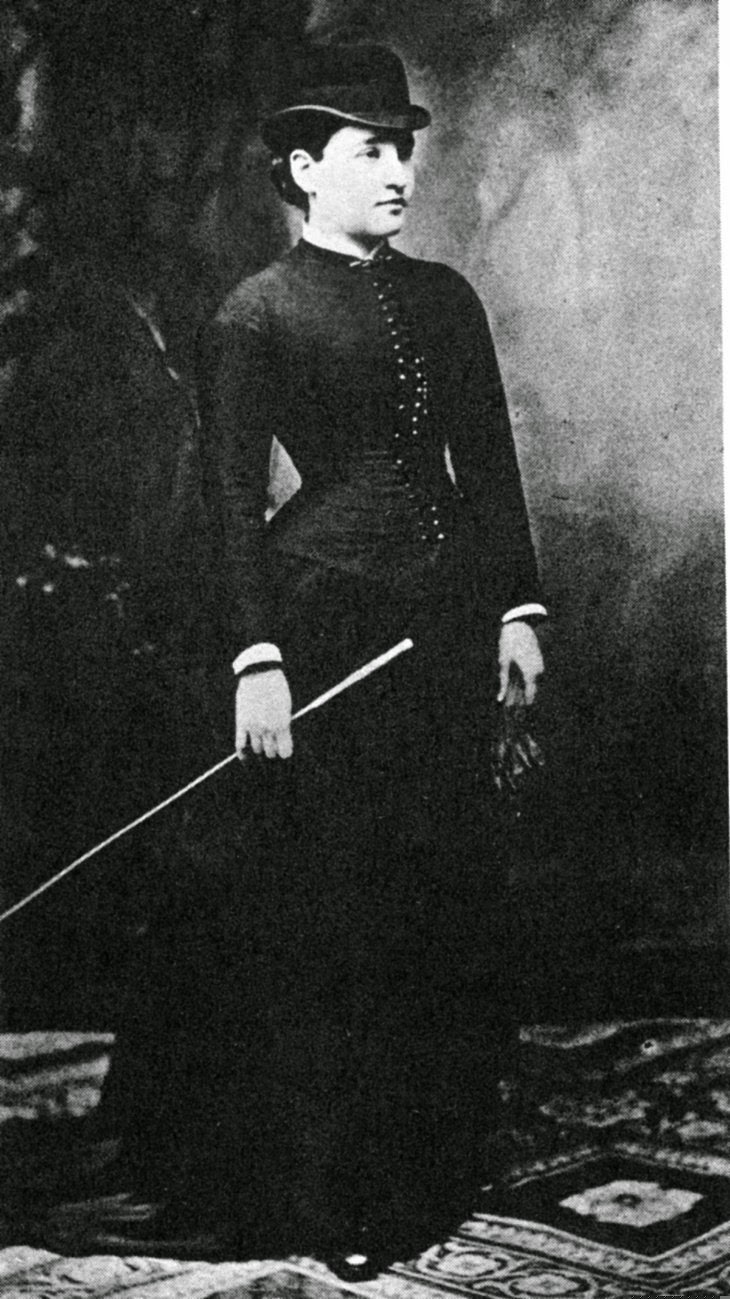

| Alexander's expedition 334-324 BC |

|

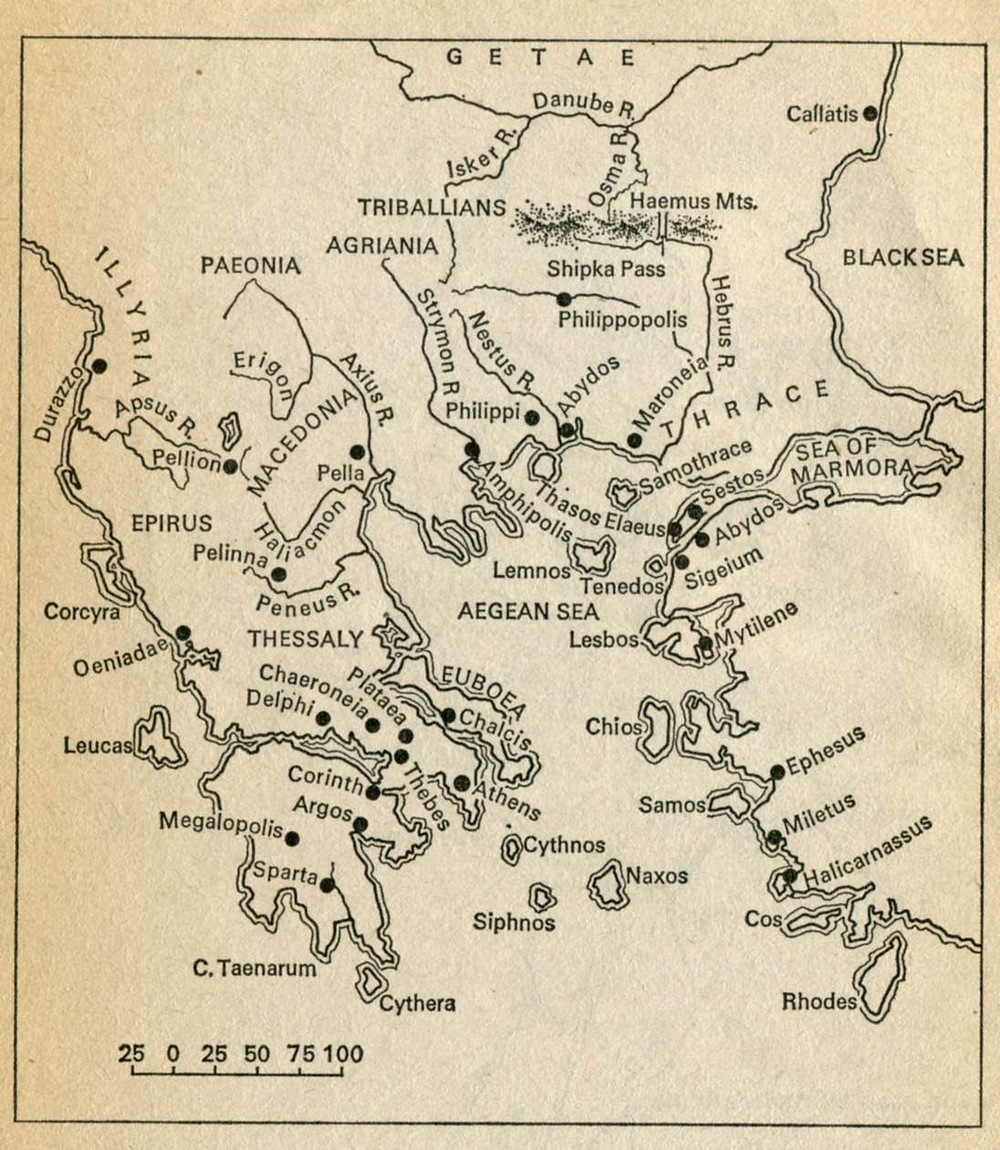

| Cross-section of mountain barriers to Iranian plateau |

|

|

Copy of a statue of Alexander regarded as

good likeness

|

|

| Napoleon 1803 (age 34) |

|

| Napoleon 1796, General of the Army of Italy (age 27) |

|

|

| Napoleon 1796 (age 27) |

|

| Napoleon 1799 (age 30) |

|

| Napoleon 1812 (age 43) |

|

Napoleon in exile at St.

Helena, 1819 (age 49)

|

|

Napoleon on his death bed, 1821

(age 51)

|

What happens, then, when more and more of our interaction takes place at a distance, mediated by mobile phones, text messages, and computer posts to networks of perhaps thousands of persons? When interaction is mediated rather than face-to-face, the bodily component of interaction rituals (IRs) is missing. Throughout the history of social life up until recently, IRs have been the source of solidarity, symbolic values, moral standards, and emotional enthusiasm (what IR theory calls Emotional Energy, EE). Without bodily assembly to set off the process of building IRs, what can happen in a mediated, disembodied world?

There are at least three possibilities. First, new kinds of IRs may be created, with new forms of solidarity, symbolism, and morality; in this case, we would need an entirely new theory. Second, IRs fail: solidarity and the other outcomes of IRs disappear in a wholly mediated world. Third, IRs continue to be carried out over distance media, but their effects are weaker: collective effervescence never rises to very high levels, and solidarity, commitment to symbolism, and other consequences continue to exist but at a weakened level.

Empirical research is now taking up these questions. The answer that is emerging seems to be the third alternative: it is possible to achieve solidarity through media communications, but it is weaker than bodily, face-to-face interaction. I argued in Interaction Ritual Chains, chapter 2 [2004] that mediated communications that already existed during the 20th century-- such as telephones-- did not replace IRs. Although it has been possible to talk to your friends and lovers over the phone, that did not replace meeting them; a phone call does not substitute for a kiss; and telephone sex services are an adjunct to masturbation, not a substitute for intercourse. When meaningful ceremonies are carried out-- such as a wedding or funeral-- people still assemble bodily, even though the technology exists to attend by phone-plus-video hookup. Research now under way on conference calls indicates that although organizational meetings can be done conveniently by telephone, nevertheless most participants prefer a face-to-face meeting, because both the solidarity and the political maneuvering are done better when people are bodily present.

The pattern turns out to be that mediated connections supplement face-to-face encounters. Rich Ling, in New Tech, New Ties [MIT Press, 2008], shows that mobile phone users talk most frequently with persons whom they also see personally; mobile phones increase the amount of contact in a bodily network that already exists. We have no good evidence for alternative number two (solidarity disappearing in a solely mediated world), because it appears that hardly anyone communicates entirely by distance media, lacking embodied contact. It may be that such a person would be debilitated, since we know that physical contact is good for health and emotional support. The comparative research still needs to be done, looking at the amount of both mediated and bodily contact that people have; moreover, such research would have to measure how successful the IRs that take place are, in terms of their amount of mutual focus, shared emotion, and rhythmic coordination. Face-to-face encounters can fail as well as succeed, so we should not expect that failed face-to-face IRs are superior to mediated interactions in producing solidarity, commitment to symbols, morality, and EE.

Compare now different kinds of personal media: voice (phones); text; and multi-media (combinations of text and images). Voice media in real time allow for some aspects of a successful IR, such as rhythmic coordination of speaking; voice messages, on the other hand, should produce less solidarity, because there is no rapid back-and-forth flow. There is even less rhythmic coordination in exchanging text messages; even if one answers quickly, this is far from the level of micro-rhythms found in mutually attuned speech, which take place at the level of tenths of a second, and from the even more fine-grained micro-frequencies of voice tones which produce the felt bodily and emotional experiences of talk. Adding visual images does not necessarily increase the micro-coordination; still photographs do not convey bodily alignments in real time, and in fact often depict a very different moment from the one taking place during the communication; they are more in the nature of image-manipulation than spontaneous mutual orientation. Real-time video plus voice is closest to a real IR, and should be expected to produce stronger outcomes (solidarity, etc.), although this remains to be tested.

Many people, especially youth, spend many hours a day on mediated communication. Is this evidence that mediated interactions are successful IRs, or a substitute for them? I suggest a different hypothesis: since mediated IRs are weaker than bodily face-to-face IRs, people who have relatively few embodied IRs try to increase the frequency of mediated IRs to make up for them. Some people spend a great deal of time checking their email, even apart from what is necessary for work; some spend much time posting and reading posts on social network media. I suggest that this is like an addiction; specifically, the type of drug addiction which produces “tolerance,” where the effect of the drug weakens with habituation, so that the addict needs to take larger and larger dosages to get the pleasurable effect. To state this more clearly: mediated communications are weaker than embodied IRs; to the extent that someone relies on mediated rather than embodied IRs, they are getting the equivalent of a weak drug high, so they increase their consumption to try to make up for the weak dosage. Here again is an area for research. New kinds of electronic media appear rapidly and are greeted with enthusiasm when they first spread; hence most of what is reported about them is wild rhetoric. The actual effects on people’s experience of social interaction are harder to measure, and require better comparisons: people with different amounts of mediated communication, in relation to different amounts of embodied IRs (and at different levels of IR success and failure); and all this needs to be correlated with the outcome variables (solidarity, symbolism, etc.).

The theory of IRs is closely connected with the sociological theory of networks. Networks are usually conceived on the macro- or meso-level, as if they were an actual set of connecting lines. But seen from the micro-level, a connection or tie is just a metaphor for the amount and quality of micro-interaction which takes place between particular individual nodes. What we call a “strong tie” generally means people who converse with each other frequently about important matters, which is to say, people who frequently have successful IRs with each other. A “weak tie” is some amount of repeated contact, but with weaker solidarity and emotion, i.e. moderate IRs. With this perspective in mind, let us consider two kinds of electronic network structures: those which are node-to-node (an individual sends a message to another specific individual, as in email); and those which are broadcast, one to many (such as posts on a blog or social media site). Popular social media in recent years have created a type of network structure that is called “friends”, but which differs considerably from traditional friendships carried out through embodied IRs.

Traditional embodied IRs can be one-to-one. This is typically what exists in the most intimate kinds of friendships, such as lovers, partners, or close friends. In Goffman’s terms, they share a common backstage, where the nuances and troubles of how they carry out frontstage social performances are shared in secret. In my formulation, close friends are backstage friends. An intermediate type of friend might be called a “sociable friend”, someone who meets with others in an informal group (such as at a dinner table or a party); here the conversation is less intimate, more focused on items of entertainment, or in more serious circles, on politics or work. Research on networks indicates that most people have very few intimate friends (sharing backstage secrets), and perhaps a few dozen sociable friends.

What then is the status of “friends” defined as those with whom one exchanges posts on a social media site, typically hundreds or thousands of persons? This is a broadcast network structure, not one-to-one; thus it eliminates the possibility of strong specific ties. In addition, because these interactions do not take place in real time, micro-coordination does not exist; no strong IRs are created. It is true that persons may post a good deal of detail about their daily activities, but this does not necessarily lead to shared emotions at the intensity of emotional effervescence that is generated in successful IRs. Pending the results of more micro-sociological research, I would suggest that broadcast-style social media networks have generated a new category of “friendship” that is somewhere on the continuum between “sociable friend” [itself a weaker tie than backstage friend/strong tie] and “acquaintance” [the traditional network concept of “weak tie”]. The “social-media friend” has more content than an “acquaintance tie”, since it conveys much more personal information.

As yet the effects of sharing this kind of personal information are unclear. The information on the whole is superficial, Goffmanian frontstage; one possibility that needs to be considered is that the social media presentation of self is manipulated and contrived, rather than intimate and honest. This is nothing new; Goffman argued that everyone in traditional face-to-face interaction tries to present a favorable image of themselves, although this is mostly done by appearance and gesture, whereas social media self-presentation is based more on verbal statements, as well as photo images selected for the purpose. One could argue that Goffmanian everyday life interaction makes it harder to keep up a fake impression, because flaws can leak through one’s performance, especially as emotions are expressed and embarrassment may result; whereas a social media self-presentation gives more opportunity to deliberately contrive the self one wants to present. It is, so to speak, Goffmanian pseudo-intimacy: a carefully selected view of what purports to be one’s backstage.

It is true that young people often post things about themselves that would not be revealed by circumspect adults (sex, drugs, fights, etc.). But this is not necessarily showing the intimate backstage self; generally the things which are revealed are a form of bragging, claiming antinomian status (the reverse status hierarchy of youth cultures, in which official laws and restrictions are challenged). Talking about illicit things is not the same as intimate backstage revelation. To say that one has gotten into a fight can be a form of bragging; more intimate would be to say you were threatened with a fight and felt afraid, fought badly, or ran away. (The latter is a much more common occurrence, as documented in Randall Collins, Violence: A Micro-sociological Theory, Princeton Univ. Press, 2008.) To brag about one’s sex life is not the same as talking about the failures of a sexual attempt (again, a very common occurrence: David Grazian, On the Make: The Hustle of Urban Nightlife, Univ. of Chicago Press, 2008). The antinomian selves posted by many young people are a cultural ideal within those groups, not a revelation of their intimate selves. Research here would in fact be a good site for studying the contrived aspect of the presentation of self.

Let us consider now the relationship between IR theory and social conflict; and ask whether the new electronic media change anything. Consider first the personal level, as individuals get into conflicts with other individuals, or small groups quarrel and fight each other. In the early days of the Internet, people used to frequently insult each other, in so-called “flame wars”. The practice seems to have declined as participation in the Internet has become extremely widespread, and people configure their networks for favorable contacts (or at least favorable pretences, as in spamming). Insulting strangers whom one does not know face-to-face fits quite well with the patterns of violent conflict [Collins, Violence]: violence is in fact quite difficult for persons to carry out when they are close together, and is much easier at a distance. Thus in warfare, artillery or long-distance snipers using telescopes are much more accurate in killing the enemy than soldiers in close confrontation. Contrary to the usual entertainment media mythology about violence, closeness makes antagonists incompetent; they often miss with their weapons even if only a few meters away, and most antagonists are unable to use their weapons at all.

I have called this emotional pattern “confrontational tension/fear”, and have argued that this difficulty in face-to-face violence comes from the fact that violence goes against the grain of IRs. Humans are hard-wired in their nervous systems to become easily entrained with the bodily rhythms and emotions of persons they encounter in full-channel communication; hence the effort to do violence cuts across the tendency for mutual rhythmic coordination; it literally produces tension which makes people’s hands shake and keeps their guns from shooting straight. Professionals at violence get around this barrier of confrontational tension/fear by techniques which lower the focus of the confrontation: attacking their enemy from the rear, or avoiding the face and above all eye contact, such as by wearing masks or hoods.

Thus, to return to the case of conflict over the Internet, it is easier to get into a quarrel and to deliver insults from a distance, against a person whom you cannot see. Internet quarrels also have an easy resolution: one simply cuts off the connection. This is similar to conflict in everyday life, where people try to avoid conflicts as much as possible by leaving the scene. (The style of tough guys who go looking for fights applies only to a minority of persons; and even the tough guys operate by micro-interactional techniques which enable them to circumvent confrontational tension, especially by attacking weak victims. The fearless tough guy is mostly a myth.)

On the individual level, then, electronic media generally conform to larger patterns of conflict. What about on the level of small groups? Little groups of friends and supporters may get into conflicts in a bar or place of entertainment, and sometimes this results in a brawl. The equivalent of this in the electronic media seems hardly to exist. There are fantasy games in which the player enacts a role in a violent conflict--- but this is not a conflict with other real people; and furthermore it is in a contrived medium which lacks the most basic features of violence, such as confrontational tension/fear. Violent games only serve to perpetuate mythologies about how easy violence really is. In my judgment, such games are more of a fantasy escape or compensation for the real world than a form of preparation for it.

It is not clear from sociological evidence that gangs use the social media much. A major component of the everyday life of a criminal gang is the atmosphere of physical threat. Statistically, gang members do not engage in a lot of violence (contrary to journalistic impressions, murders even in very active gang areas occur at a rate of hardly 1 per 100 gang members per year [evidence in Collins, Violence p. 373]); but gangs spend a great deal of time talking about violence, recalling incidents, bragging, and planning retaliation. Moreover, gang members have territory, a street or place they control; they must be there physically, and most of their contacts are with other persons in their own gang or its immediate surroundings. Gang members are very far from being cosmopolitans, and do not have wide networks. Studies of network usage rarely show gang members involved. (There are some incidents of gangs monitoring a neighbourhood information network to see who is away from home, so that their houses could be burglarized; but this is more in the nature of using the Internet to locate victims than of building ties within the gang.) I would conclude that gangs are too concerned with maintaining a high level of solidarity inside their group, and with physical threat against outsiders, to be much interested in weak-IR media.

Let us consider another level of conflict, that involving official or formal organizations. On one side are hackers, individuals who use their electronic expertise to hack into an organization, either purely for the sake of disruption, or for financial gain. Sociologists and criminologists know relatively little about hackers. They do not appear to be the same kinds of people who belong to gangs; as indicated, gangs are very concerned about their territorial presence, and are most concerned to fight against rival gangs; hackers seem to be from a different social class and are more likely to be isolated individuals. (This needs investigation-- do hackers connect with each other via the internet? Are they underground groups of close friends? Some are probably more isolated than others; which type does the most hacking and the most damage?)

On the other side, officials also use electronic media to attack and counter-attack. Leaving aside the issue of how organizations defend themselves against hacking and cyberwar, the point I want to emphasize is that official agencies of control have an abundance of information about the individuals who use electronic media most. Social networking sites especially, where young people post all sorts of information about themselves, are exposed to police, as well as to employers and investigators; as many naïve youth have discovered to their disadvantage, their antinomian bragging can get them disqualified from jobs, or even arrested (for example, for contact with forbidden porn sites). Sociologically, it is best to conceive of the electronic media as a terrain on which conflict can take place between different forces. For many people, especially youth in the first flush of enthusiasm for new possibilities of connections and self-presentation, the electronic media seem to be a place of freedom. But this depends on the extent to which official agencies are constrained from invading the same media channels in search of incriminating information. Here the electronic media have to be seen in the perspective of surrounding social organization: political and legal processes influence how much leeway each side of the conflict has in being able to operate against the other.

The technology of the media is not a wholly autonomous force; it is chiefly in democracies with strong legal restrictions on government agencies that the electronic media give the greatest freedom for popular networks to operate. It is sometimes argued that network media favor social movements, allowing them to mobilize quickly for protests and political campaigns; thus it is claimed that the network media favor rebellions against authoritarian regimes such as China or Iran. But these same cases show the limits of electronic networks.

One weakness is that networks among strangers are not actually very easy to mobilize; social movement researchers have demonstrated that the great majority of persons who take part in movements and assemble for demonstrations do not come as isolates, but accompanied by friends; a big crowd is always made up of knots of personal supporters. It is this intimate structure of clusters in the network that makes political movements succeed, and its absence that makes them fail. Thus electronic media are useful for activating personal networks, but are not a substitute for them. (This parallels Ling’s conclusion about mobile phones: that they supplement existing personal contacts rather than replacing them.)

A second weakness of electronic networks for mobilizing political protests is that a sufficiently authoritarian government has little difficulty in shutting down the network. China and Iran have shown that a government can cut off computer servers and mobile phone connections. The more democratic part of the world can protest, and the commercial importance of the Internet gives the protests some economic allies. But mere disapproval from the outside has not been a deterrent for authoritarian regimes in the past. It is not at all impossible that a Stalinist type of totalitarian dictatorship could emerge in various countries. The multiple connections of the electronic media would not prevent such a development; indeed, a determined authoritarian government would find the Internet a convenient way of spying on people. Especially as the tendency of technology and capitalist consolidation in the media industries is to bring all the media together into one device, it would be possible for government super-computers to track considerable details about people’s lives, expressed beliefs, and social connections. In George Orwell’s Nineteen Eighty-Four (published in 1949), the television set is not something you watch but something that watches you, at the behest of the secret police. The new media make this increasingly easy for a government to do. Whether a government will do this or not does not depend on the media themselves; it is a matter for the larger politics of the society. In that respect, too, the findings of sociology, both micro-sociology and macro-sociology, remain relevant for the electronic network age of the future.

I will conclude with an even more futuristic possibility. Up to now, the electronic media produce only weak IRs, because they lack most of the ingredients that make IRs successful: bodily presence is important because so many of the channels of micro-coordination happen bodily, in the quick interplay of voice rhythms and tones, emotional expressions, gestures, and, in more intense moments, bodily touch. It is possible that the electronic media will learn from IR theory and try to incorporate these features into electronic devices. For instance, communication devices could include special amplification of voice rhythms, perhaps artificially making them more coordinated. Persons on both ends of the line could be fitted with devices to measure heart rate, blood pressure, breathing rate, perhaps eventually even brain waves, and to transmit these to special receivers on the other end, so that each individual would receive the other person’s physiological signals electronically, fed into his or her own physiology. At least two lines of development could occur. First, electronic media could become more like real IRs with multiple physiological channels; mediated interaction would then become more successful in producing IRs, and could tend to replace bodily interaction, since the latter would no longer be superior. Second, these electronic feeds could be manipulated, so that one could present a Goffmanian electronic frontstage, so to speak, appearing to send a physiological response that is contrived rather than genuine. Ironically, this implies that traditional patterns of micro-interaction are still possible even if they take place via electronic media. More solidarity might be created; but it might also be faked. Interaction Rituals have at least these two aspects: social solidarity, but also the manipulated presentation of self. The dialectic between the two seems likely to continue for a long time.

Free will is a long-standing philosophical question. Although often regarded as intractable, the issue becomes surprisingly clear from the vantage point of micro-sociology, the theory of Interaction Ritual Chains. Every aspect of the free-will question is sociological. Will exists as an empirical experience; free will, however, is a cultural interpretation placed upon these experiences in some societies but not in others. Since both the experience of will and the cultural interpretations vary across situations, we have sociological leverage for showing the social conditions that cause them. I will conclude by arguing that our goal as sociologists is to explain as much as we can, and that this means a deterministic position about will. Nevertheless, not believing in free will does not change anything in our lives, our activism, or our moral behavior.

I will not concentrate on philosophical arguments regarding free will. A brief summary of the world history of such arguments is in the Appendix: The Philosophical Defense of Free Will. Its most important conclusion is that intellectuals in Asia were little interested in the topic; it had a flurry of discussion in early Islam, but then orthodoxy decided for determinism; free will was chiefly a concern of Christian theologians, and has become deeply ingrained in the cultural discourse of the modern West.

One philosophical point is worth making at the outset, in order to frame the limits of what I am discussing. Most philosophical argument takes the existence of free-will as given, and concentrates on criticizing viewpoints which might undermine it. It is notoriously difficult to say anything substantive about free-will itself, and indeed it is defined mainly by negation. The same can be said about the larger question of determinacy and indeterminacy; most argument is about the nature and limits of determinacy, with indeterminacy left as an unspecified but often militantly supported residual. I will bypass the general question of determinacy/indeterminacy, with only the reminder that the indeterminist position in general does not necessarily imply free-will. A universe of chance or chaos need not have any human free-will in it. One long-standing philosophical argument (shared by Hume and J.S. Mill) is that for free-will to operate, there must also be considerable determinacy in the world, otherwise the action of the human will could never be effective in bringing about results. Some intellectuals today believe that sociology cannot or should not try to explain anything, since the social world is undetermined. If so, they should recognize they are undermining the possibility of human agency they so much admire. In any case, what I am concerned with here are the narrower questions: what is will; and why some people think it is free.

Three Modern Secular Versions of Free Will

There are three main variants of what we extol as free-will.

First, the individual is held to be responsible for his or her acts. This is incorporated in our conception of a capable adult person, exercising the rights of citizenship and subject to constraints of public law. Above all, the concept of free will is embedded in modern criminal law; for only if someone is responsible for his/her actions is it considered just to impose a criminal punishment. Similarly in civil law the notion of the capability to make decisions is essential for the validity of contracts. This is characteristic of modern social organization and its accompanying ideology: individual actions are to be interpreted in terms of the concept of free will if these institutions are to operate legally.

Responsibility in the public sense is related to self-discipline in the private sphere. Will-power is when you "resist temptation," keeping away from the refrigerator when on a diet, forcing yourself to exercise to stay in shape, doing your work even though you’d rather not. In both public and private versions, free-will of this sort is actually a constraint. You might want to do something differently, but you pull yourself together, you obey the law, you do what you know you should. One might describe this with a Freudian metaphor as "superego will"; under another metaphor, "Weberian Protestant Ethic will". Such will power has a psychological reality: the feeling of putting out effort to overcome an obstacle, the feeling of fighting down temptation. But is it free, since it so obviously operates as a constraint, and along the lines of the official standards of society?

A second, almost diametrically opposed notion of free-will is spontaneity or creativity. Here we extol the ability to escape from socially-imposed patterns, to throw off the restraints of responsibility, seriousness and even morality. This is private will in opposition to official will, or at least on holiday from it. This could be called "Nietzschean will", or in Freudian metaphor, "Id-will". Again one could question its freedom. The Freudian metaphor implies that such will is a drive, perhaps the natural tendency of the body to fight free of constraints and pursue its own lusts. Schopenhauer, whose metaphysics rests on the will as Ding-an-sich, explicitly saw will as driven rather than as free. Neither Greek nor Christian philosophy would regard spontaneity-will as free, but as bondage to the passions. The value of spontaneity is a peculiarly modern one, connected with romanticism and counter-culture alienation from dominant institutions.

A third conception of will is reflexiveness: the capacity to stand back, to weigh choices, to make decisions. Reflexive deliberation no doubt exists, among some people at some times. Some philosophical and sociological movements give great emphasis to reflexivity (including existentialism, ethnomethodology, post-modernism), but apart from intellectual concerns, it is not clear how much reflexivity there is in everyday life.

The three types of free will are opposed to one another; they are all distinctively Western and modern; and they all have moral loadings of one kind or another. It is easy to find a social basis for all three components: for moral ideals and commitments to self-discipline; for feelings of spontaneous energy; and for reflexive thinking. All three can be derived from the theory of Interaction Rituals.

Interaction Rituals Produce Varying Emotional Energy, the Raw Experience of Will

The basic mechanism of social interaction is the Interaction Ritual (IR). Its ingredients are assembly of human bodies in the same place; mutual focus of attention; and sharing a common mood. When these ingredients are strong enough, the IR takes off, heightening mutual focus into intersubjectivity, and intensifying the shared mood into a group emotion. Voice and gesture become synchronized, sweeping up participants into rhythmic entrainment. Successful IRs generate transituational outcomes, including feelings of solidarity, respect for symbols recalling group membership, and most importantly for our purposes, emotional energy (EE). The person who has gone through a successful ritual feels energized: more confident, enthusiastic, proactive. Rituals can also fail, if the ingredients do not mesh into collective resonance; a failed ritual drains EE, making one depressed, passive, and alienated. Mediocre IRs result in an average level of EE, bland and unnoticed.

EE is the raw experience that we call “will”. It is a palpable feeling of body and mind; “spirit” in the sense of feeling spirited, in contrast to dispirited or downhearted (among many metaphors for high and low states of EE). When one is full of emotional energy, one moves into action, takes on obstacles and overcomes them; the right words flow to one’s tongue, clear thoughts to one’s head. One feels determined and successful. But will is not a constant. Some people have more of it than others; and they have more of it at some moments than at others. The philosophical doctrine that people always have will is empirically inaccurate. And precisely because it does vary, we are able to make sociological comparisons and show the conditions for high, medium, or low will power.

Persons participating in a successful IR generate more EE, more will. Thus one dimension of variation is between persons who have a steady chain of successful IRs as they go through the moments of their days, and those who have less IR success, or no success at all. I have called this process the market for interactions; persons do better in producing successful IRs when they are able to enter bodily assemblies and attain mutual focus and shared mood; this in turn depends on cultural capital and emotions from prior interactions. IR chains are cumulative in both positive and negative directions; persons who are successful in conversations, meetings and other shared rituals generate the symbolic capital and the EE to become successful in future encounters. Conversely, persons who fail in such interactions come out with a lack of symbolic capital and EE, and thus are even less likely to make a successful entry into future IRs.

A second dimension of relative success in IRs comes from one’s position inside the assembled group: some are more in the center of attention, the focal point through which emotions flow; such persons get the largest share of the group’s energy for themselves. Other persons are more peripheral, more audience than a leader of the group’s rhythms; they feel membership but only modest amounts of EE. Qualities traditionally ascribed to “personality” or individual traits are really qualities of their interactional position.

IR chains tend to be cumulative, making the EE-rich richer still, and the EE-poor even less energized. But the EE-rich may stop rising, and even fall, depending on the totality of conditions for IRs around them. Extremely high-EE individuals have a trajectory that makes them the center of attention, not just in small assemblies such as two-person conversations, but as the orator or performer at the center of crowds. Persons who channel the emotions of large crowds by putting themselves in the focus of everyone’s attention are variously called “hero”, “leader”, “star”, “charismatic”, “popular”, etc. But charisma can fall, if crowds no longer assemble, or their attention is diverted by other events or by rival leaders. The strongest focus of group attention happens in situations of conflict, and it is in periods of danger and crisis that charismatic leaders emerge; but a conflict can be resolved, or the leader’s efforts to control the conflict may fail. Thus the social structure of conflict, which temporarily gives some individuals high EE, can also deprive them of those conditions and hence of EE.

In a conflict, will power consists in imposing one’s trajectory upon opponents; in Weber’s famous definition, power is getting one’s way against others’ resistance. This is true on the micro-level of individual confrontations in arguments and violent threats [how this is done is documented in Violence: A Micro-Sociological Theory]. Conflict is a distinctive type of IR in which someone’s EE-gain is at the expense of someone else’s precipitous EE-loss. In the conflict of wills, some find circumstances that give them even more will power, while others lose their will. Sociologically, will power is not merely one’s own; not an attribute of the individual, but of the match-up of all the individuals who come together in an IR. Religious and philosophical conceptions of will, abstracted from the real social context in which it is always found, create a myth of the individual will.

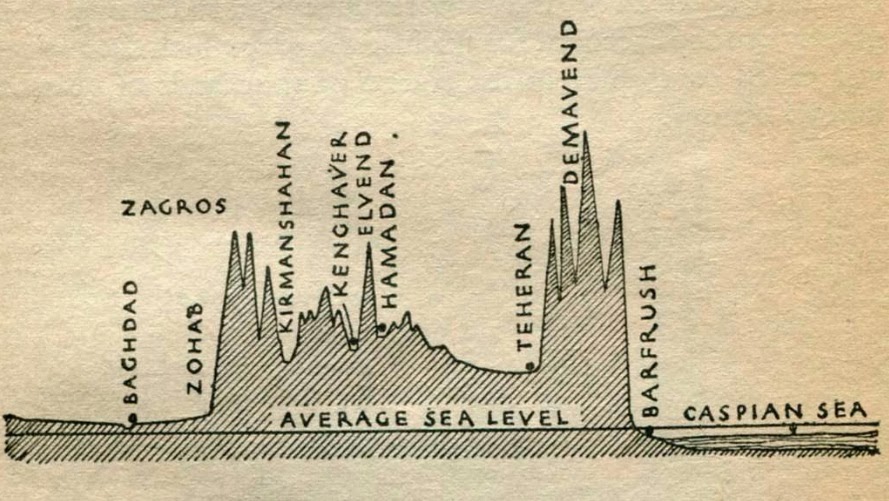

Famous generals, politicians, and social movement leaders are lucky if they die at the height of their energy; many fade away when the crowds no longer assemble for them, or they can no longer move the crowd. Napoleon, during his meteoric career as victorious general, government reformer and dictator, was noted for his extreme energy: taking on numerous projects, inspiring his followers, moving his troops faster than any opponent; he was known for sleeping no more than a few hours a day, in snatches between action. He got his EE by being constantly in the center of admiring crowds, in situations of dramatic emotion, focusing the energy of military and political organization around himself. Yet when Napoleon was finally defeated and exiled to a remote island, he lasted less than six more years, dying at the age of 51. [Felix Markham, Napoleon] The swirl of crowd-focused emotion that had sustained him was gone; the interactional structure that had given him enormous powers of will in far-flung organization now deserted him, leaving him fat and indolent, eventually without will to live.

Naïve hero-worship ignores the interactional structures that produce high-EE individuals in moments of concentrated assembly and social attention. Will is socially variable; and the IR patterns that give large amounts of will to some few individuals thereby deprive many others of similar amounts of EE. Very few can be in the center of big crowds. Will power is not entirely a zero-sum game, since successful IRs can energize, in some degree, everyone who takes part in the enthusiastic gathering. But such hugely energizing gatherings are transitory; and moments of collective will become ages of remembered glory, because they are rare.

When is EE Experienced as “Free Will”?

EE is a real experience, and thus will power is an empirically existing phenomenon. One mistake is to interpret will experiences as solely a characteristic of the individual. As many philosophers have noted from Hobbes (1656) to O'Shaughnessy (The Will, 1980), you cannot will to will. The sociological equivalent is: the structure of social interaction, as you move through the networks in an IR chain, determines how much emotional energy you have at any given moment, hence how much will.

Another mistake is to identify “will” with “free will.” Across world history, experiences of EE are sometimes interpreted as free will, sometimes not.

Julius Caesar had a high level of EE. During his political career and military campaigns he was extremely energetic: fast-moving, quick to decide on a plan of action, confident he could always lead his men through any difficulty. Like Napoleon, he needed little sleep, and carried out multiple tasks of organizing, negotiating, dictating messages incessantly even while traveling by chariot. In combat, he wore a scarlet cape, letting enemies target him because it was more important to make himself a center of inspiration for his troops. His EE came from techniques not only of putting himself in the center of mass assemblies, but of dominating them. A telling example occurred during the civil war when he received a message that his troops were mutinous for lack of pay. Although they had attempted to kill the officers Caesar sent to negotiate with them, he boldly went to the assembly area and mounted the speaking platform. The troops shouted out demands to be released from their enlistments. Without hesitation, Caesar replied, “I discharge you.” Thousands of soldiers, taken aback, were silenced. Caesar went on to tell them that he would recruit other soldiers who would gain the victory, then turned his back to leave. The soldiers clamored for Caesar to keep them in his army; in the end, he relented, except for his favorite legion, whose disloyalty he declared he could not forgive. [Appian, The Civil Wars; see also Caesar, Gallic Wars] Caesar was famously merciful to those he defeated, but he always reserved some for exemplary punishment. Caesar’s success as a general depended to a large extent on being able to recruit soldiers, including taking defeated soldiers from his opponents into his own army. Thus his main skill was the social technique of dominating the emotions of large assembled groups. He was attuned to the rhythm of such situations, playing on the mood of his followers and enemies, seizing the moment to assert a collective emotional definition of the situation.

In the language of modern hero-adulation, we would say Caesar was a man of enormous will power. But ancient categories of cultural discourse described him in a different way. Caesar was regarded as having infallible good luck-- whenever disaster threatened, something would turn up to right the situation in his favor. This something was no doubt Caesar’s style of seizing the initiative and making himself the rallying point for decisive action. But the ancient Romans had no micro-sociology; nor did they have a modern conception of the autonomous individual. Caesar was interpreted as possessing supernatural favor-- the kind of disembodied spirit of fate that augurs claimed to discern in flights of birds or the organs of animals in ritual sacrifices. In the ancient Mediterranean interpretive scheme, outstanding individuals were explained by connection to higher religious forces. Religious leaders were interpreted as mouthpieces for the voice of God. Hebrew prophets, pagan oracles, as well as movement leaders such as John the Baptist, Jesus, Mani, and Muhammad, were charismatic leaders in just the sense described in IR theory: speakers with great resonance with crowds of listeners, able to sway their moods and impose new directions of action and belief. But instead of regarding these prophets and saviors as possessing supreme will of their own, all (including in their own self-interpretation) were held to be vessels of God-- “not my will, but Thy will be done.”

EE arising from being in the focal point of successful IRs has existed throughout world history. When does this EE become interpreted as will inhering in an individual? And when does it become interpreted as “free will”? I have noted three species of “free will” recognized in the Christian/post-Christian world: self-disciplined will against moral temptations; spontaneous will against external restraints; and reflexivity in considering alternatives. All of these have the character of a conflict between opposing forces inside an individual. Free will is not just will power in the sense of Caesar or Napoleon being more energetic and decisive than their enemies, and imposing their will upon their troops and followers. Free will is not Jesus or Muhammad preaching moving sermons and inspiring disciples. These are phenomena of EE, arising out of a collective IR, in which the entire group is turned in a direction represented by the leader at the focal point of the group. Although such EE is the raw material of experiences that can be called “will”, it does not inhabit the conceptual universe in which the issue of “free will” arises.

Micro-sociologically, free will is an experience arising where the individual feels opposing impulses within him/herself. Consider the scheme of self-disciplined free will, doing the right thing by rejecting an impulse to do the wrong thing. Such conflict is serious when both impulses have strong EE; you want to drink, take drugs, have sex, steal or brawl; but another part of yourself says no, that is the way of the devil (immoral, unhealthy, illegal, and other cultural phrasings). In terms of IRs, on one side are interactional situations of carousing, drug-taking, hanging out with criminals, etc.; and this IR chain has been successful in generating enthusiasm, appetite, drive for those desired things. [As argued in Interaction Ritual Chains, addiction, sex and violence are not primordial drives of the unsocialized human animal, but social motivations, forms of EE developed by successful IRs focusing on these activities.] These desires set up a situation for free will decision-making when there is another chain of experiences-- such as religious meetings, rehab clinics, etc.-- which explicitly focus their IRs on rejecting and tabooing such behaviors. IRs generate membership and thereby set standards of what is right; simultaneously they define what is outside of membership, and hence is wrong.

Conflicts inside an individual which set up the possibility of the free will experience must come from a complex social experience. Individuals are exposed to antithetical IR chains, some of which generate the emotional attractiveness of various kinds of pleasures, others of which generate antipathy to those pleasure-indulging groups. In an extremely simple society, such conflicts do not arise. In a tribal group where everyone participates in one chain of rituals, there is no conflict between rival sacred objects; no splits between different channels of EE for individuals. The individual self in such a network structure would simply internalize the symbols and emotions of a single group; there would be no divided self, no inner conflict, and no occasion for free will.

Self-discipline will can exist only when individuals participate in rival, successful IRs. Self-discipline as a moral choice probably originated in religious movements of conversion. Such movements are first found in complex civilizations; not earlier, since a tribal religion is not an option one joins, but a habitual framework of rituals that structures the activities of the tribe. Religious movements that recruit new followers, pulling them out of household and family-- for instance into a church that shall be “father and mother in Christ”-- put the emphasis on individuals, by their mode of recruitment, extracting persons from unreflective collective identities, and requiring them to make a deliberate choice of membership. This is one source of the conception of individuality, which grows up especially strongly in the Christian tradition. The emphasis on the moral supremacy of free will is heightened when there are rival movements, each seeking to convert followers. Augustine, around 400 A.D., is one of the first theologians to emphasize free will as superior to the intellect, since will is one’s power to choose among alternatives; in his autobiographical Confessions, he dramatizes his moments of rejecting his early carousing, and his conversion from the rival sect of Manicheans.

The doctrine of free will as a choice of the good against evil privileges key life-events, the moment of conversion from one intense IR community to another. But once established in a new IR chain of righteous rituals, there is relatively little tension, hence little of the peak experience of choosing one against the other. The choice of God and the righteous life can be kept in people’s consciousness in a pro forma way by sermons on the topic; and more strongly by church practices which raise the level of tension artificially, by preaching about the danger of temptation and back-sliding at any moment in one’s life, hence the need for continued vigilance and self-discipline. Missionary activities, by attempting to convert others, also ensure a chain of IRs focused on the boundary between the group of the righteous and that of the non-righteous. Revival meetings, where individuals are oratorically called to come forward in public to repent and be saved, use a large-scale IR to repeat the image of the fateful decision; here the repentant sinner becomes an emblem kept before the eyes of church members even if most of them are no longer wielders of free will but merely an audience.

Free will, as an act of self-discipline, is a cultural concept arising from the social experience of choosing the cult of the good against the cult of the bad. It is socially constructed above all by Christianity, as a religion of public conversion in mass assemblies. In secular, post-Christian society, this conception of free will has weakened, although structurally similar practices have carried over into the methods of rehabilitation programs and applied psychology. Health-conscious persons invoke will power to keep themselves from over-eating, or to promote exercising; even here, the source of self-discipline typically comes from social organization-- the diet counselors, gym or exercise group which carry out IRs around the sacred object of health. Something approaching the intensity of religious rituals, with their righteous subordination of wrong behavior to right action, is found in political and social movements, especially those that gather for confrontational demonstrations that generate strong emotions. It is here that the secular conception of “free will”-- now sociologically labeled “agency”-- continues to extol the self-disciplined pursuit of group-enforced higher ideals, against the selfish pleasures of the non-righteous.

The major innovation in conceptions of free will dates from the turn of the 19th century, becoming prominent after 1960. I have called this spontaneity-will. It derives from the same antithesis as self-discipline will, but reverses the moral emphasis: official society now becomes the dead hand of coercion and emotional repression. Instead of converts pulling themselves up from the gutter into respectable society, its image is the neurotically self-controlling individual breaking free of convention, into spontaneity and freedom. Since the time of the Romanticist movement, and continuing through Freudian therapy and 20th century movements for sexual liberation, the emphasis has been on pleasure, precisely because it was prohibited. In the late 20th century, counter-culture movements idealized moments of intoxication-- whether from drugs, carousing, dancing, sex, or fighting. This has a structural base in its own IRs, especially the large popular music concert; in some styles, its IR locus is an athletic event, a clash of fans, or a gang confrontation.

Counter-culture conceptions of spontaneity are a type of EE, generated by mass IRs. The social technology of putting on successful IRs has changed throughout history; mass spectator sports and popular music gatherings are inventions, elaborated especially in the 20th century, for generating moments of high collective effervescence. Sound amplification, antinomian costumes, mosh pits, light shows, and other features enhance the basic IR ingredients of assembly, focus, shared emotion, and rhythmic entrainment. The ideology that goes along with the social technology of mass entertainment IRs is the ideal of opposition to the official demands and duties of traditional institutions. Rebellion-- or at least a break in the routinized conformity of straight society-- becomes the current ideal of free will.

Here again, the experience of “freedom” that individuals have depends on how much tension is felt between rival IRs. It is at the historical moment when new features of counter-culture rituals are created that there is the strongest sense of breaking away, of liberating oneself from traditional controls. Pop concerts, like sporting events and gang fights, can become routinized; they pay homage to an image of themselves as spontaneous and antinomian, even when they become local cultures of conformity. Analytically, the process is parallel to the self-discipline will of the old Christian/post-Christian tradition of conversion from evil to good: intense moments of choice are rare in people’s lives, but ritual re-enactment of the ideals of self-discipline found ways of keeping the drama before people’s eyes. In the cult of spontaneous will, early moments of rebellion are re-enacted in institutionalized form.

To summarize: the experience of will is real. It exists wherever successful IRs generate EE. Historically, those individuals who were socially positioned to have the most EE generally were not culturally interpreted as exercising free will, but were glossed with some depersonalized label, usually supernatural. The cultural category of free will was invented for moments of choice by individuals, in abjuring particular kinds of EE-generating IRs (those considered self-indulgently pleasurable) in favor of self-disciplined rejection of temptation. Following centuries of dominance by Christian and post-Christian disciplinary regimes, movements became prominent by the late 20th century, based on new mass-entertainment IRs, with an ideology defining freedom as the rejection of self-discipline. The fact that the two conceptions of free will are diametrically opposed seems ironic, but it comes from the conception of free will as a choice between two impulses within the self. Both sides of the choice are attractive-- and hence generate enough tension to make it a dramatic choice-- because each is grounded in successful IRs creating their own form of EE, which individuals carry within themselves. Ideologies, historically fluctuating as they are, can seize on either side of the conflict and extol it with such words of high moral praise as “free will”.

Reflexiveness and the Sociology of Thinking

What people think and when they think it is also determined by micro-sociological conditions. I have made this argument in detail in The Sociology of Philosophies and in Interaction Ritual Chains, chapter 5; here I will summarize key points bearing on free will.

Thought is internalized conversation; conversation is a type of IR, in which words and ideas become symbols of social membership. Successful IRs charge up symbols with EE, so that they come more easily to mind. Unsuccessful conversations deflate the symbols used in them, so they become harder to think with.

This is easiest to document for intellectual thinking, since the most successful thoughts come out in texts. So-called creative intellectuals-- those who produce new ideas that become widely circulated-- have distinctive network patterns, close to intellectuals who were successful in the previous generation, and close to intense arguments of new movements of intellectuals. What makes someone creative is to start by participating in successful IRs on intellectual topics, so that they take these ideas especially seriously, and internalize them. Since ideas represent membership in the groups who use them, intellectuals’ own thinking reproduces the structure of the network inside their minds. Such a person creates new ideas by recombining older ideas, in various ways: translating ideas to a higher level of abstraction; reflexively questioning them; negating some ideas and redoing the resulting combination; applying existing ideas to new empirical observations. These techniques for creating new ideas out of older ideas are also learned inside the core intellectual networks. Creative intellectuals learn from their network, not only what to think but how to think creatively-- they learn the art of making intellectual innovations.

Critics sometimes boggle at the sociological point, that creativity itself is socially determined-- surely, doesn’t creativity mean something new, that didn’t exist before? But just because something is new does not mean we can’t find social conditions under which it appears; and when we examine it-- as in studying the history of philosophy or other fields-- the new is always a rearrangement of older elements. Ideas that have no point of contact whatsoever with previous ideas would not be recognized by anyone else, and would not be transmitted.

There is a sociology of creativity, and it does not require the concept of free will. Will, yes-- the famous intellectuals are full of EE; I have called them energy stars. They exemplify the sociology of EE that comes from being at the core of networks of successful IRs, in this case, intellectual IRs.

Turn now to the paradigm of free will as reflective thinking, not for intellectual innovation, but for ordinary life-decisions. Just because someone thinks about alternatives does not mean that what they think is undetermined, an act of free will, beyond explanation. Psychological experiments show that people have typical biases in making choices; these are so-called non-rational choice anomalies [Kahneman, Slovic and Tversky, Judgment Under Uncertainty, 1982], since most persons do not calculate the way economists say they should. Persons who have economics training, however, do think with more of the prescribed methods of calculation. What this shows is not that economists have more free will, but rather that they follow a disciplinary paradigm, a type of social influence.

The classic free will model of thinking is: someone brings together alternatives and decides among them. Thinking mostly is carried out in sequences of words; and these phrases have a history in previous conversational IRs with others. [I neglect here thinking in images and non-verbal formulations; most likely, such thinking is even more clearly determined by socially-based emotions than verbal thinking.] Hence most of the time we don’t really weigh alternatives: some ideas are already much more charged with EE than others. Some thoughts pop into one’s head and dominate the internal conversation. Internal thinking thus reproduces the social marketplace of IRs.

Sometimes a decision is genuinely hard because alternatives on both sides are equally weighted by prior IRs. This can happen in two ways. In one version, you have been in strongly focused rival camps that think very differently, and so there are two successful IR chains pitted against each other. This is a situation of anguished decision-making, because symbols for both alternatives are highly charged and vie for attention in your thoughts. In other cases, the rival ideas are not very intense, because the situations in which they emerged were weak or failed IRs. Such ideas are hard to grapple with, hard to keep in mind; an attempted decision would be a vague and unfocused experience, maundering rather than decisive.

The practical advantages of free will, in the philosophical paradigm, are grossly exaggerated. On the whole, it is the individual who is not caught up in reflexivity, but who seizes the moment and throws him/herself into the emotional entrainment of the relevant IRs, who becomes the political leader, the financial deal-maker, the irresistible lover. Ironically, it is just those persons who manifest the least freedom in the philosophical sense who are extolled in our public ideology as the controllers of destiny.

It should not be taken for granted that just because someone can pose a choice between alternatives in the mind-- the archetypal situation imagined by philosophers-- they will actually come to a decision. We lack sufficient research on what people actually experience, but no doubt on many occasions the decision-making process fails; he/she vacillates, is stuck, paralyzed in indecision. Micro-sociological indications are that high levels of self-consciousness about choices lead to irresolvable discussions, and thus either to paralysis or to a leap back into the stream of unreflective routine or impulse. Garfinkel's breaching studies [Studies in Ethnomethodology, 1967], which force people into reflecting upon taken-for-granted routines, show them floundering like fish out of water, and indeed placing considerable moral compulsion upon each other to get back into the unreflective methods of common-sense reasoning.

Norbert Wiley [The Semiotic Self, 1994] proposes that the parts of the self can mesh into harmonious internal IRs, creating solidarity among the parts of the self. When this happens, there is a feeling of decisiveness, what I would call self-generated EE, and thus the experience of “will”. In other social configurations, the parts of the self fail to integrate into internal IRs; Wiley notes that this is the process of mental illness, and in extreme cases of dissociation among parts of the self, of schizophrenia. At less intense levels of disharmonious inner conversations, the result may be described simply as a lack of will. Once again we see that all the experiences that we call will or free will vary by social conditions.

Whatever sociologists can study empirically, we can explain, by making comparisons. Research on internal conversation or inner dialogue is now proceeding, by various methods [e.g. Margaret Archer, Structure, Agency and the Internal Conversation, 2003; in some cultural spheres, such inner experience is socially shaped as prayer: Collins, “The Micro-Sociology of Religion,” Association of Religion Data Archives Guiding Papers, 2010, http://www.thearda.com/rrh/papers/guidingpapers.asp]. We will learn more about the conditions under which people have internal IRs that succeed and fail, and thus produce inner, self-generated EE.

What is Inconsistent about Denying Free Will?

Among intellectuals, philosophers have usually felt that denial of free will is incompatible with other necessary philosophical commitments. I deny this incompatibility. Recognizing a complete sociological determinism of the self changes nothing in everyday life, or in politics or in social movements, nor does it introduce any logical or empirical inconsistency.

Recognizing sociological determinism of emotional energy, of the structural constraints of all interactional situations, and of the network structure that surrounds us, does not make any of these phenomena less real. We are still subject to the up and down flows of emotional energy. Recognizing my own flows of emotional energy does not make me any less likely to join in a political movement, to become angry about a legal injustice, or to perform any other action that our networks make available. How could it be otherwise, since all instances of what are regarded as "free will" are also phenomena of EE arising at particular points in the network of social interactions?

A traditional philosophical argument is that people who do not believe in free will are fatalistic, passive, and lethargic; instead of doing good and improving the world, they loll around indulging in immoral pleasures or mired in poverty. This is a purely hypothetical argument, with no evidence to back it up. Historically, most people in most civilizations did not believe in free will; nevertheless, people in ancient Greece, Rome, China, the Islamic world, and elsewhere had emotional energy just as in the Christian West, and produced a great deal of political and economic action. The argument that we are superior movers of world history because of our free will conception is just another instance of cultural bias.

Is there an inconsistency, then, purely at the level of concepts? There is no inconsistency in the following statements: It is socially determined that people in some networks feel it is morally right to punish criminals harshly, and that people in other networks feel it is morally right to be lenient. Believing in free will is determined. Not believing in free will is determined. Feeling individually responsible (in a given social structure) is determined. Feeling collectively responsible (in a different social structure) is determined. Feeling alienated and irresponsible is socially determined (in the counter-culture groups of the last half century).

We may feel that it is unjust to be punished if one does not have free will. But this is not a logical inconsistency. It is consistent to recognize that the actions of punishment are as much socially determined as the actions which are being punished. If we call this unjust, then injustice is determined. It is also consistent to recognize social circumstances which lead some groups to attempt to eliminate punishments; whether such movements succeed or fail is also socially determined. There is no logical inconsistency in this. It offends our folk methods of thinking about our own moral and political responsibility, but those ways of thinking are also socially determined. Feeling offended by sociological analyses such as this is socially determined.

The final step is to fall back on epistemology, and to claim that social determinism undermines knowledge and therefore undercuts itself. Again I argue that this does not follow. A theory is true or not depending on the condition of the world, however one arrived at the theory. Symbols have reference as well as sense; discussions of truth belong to the former; the social construction of thinking belongs to the latter. Granted, I have not guaranteed that my theory of will is true; but it is consistent with a widely applicable theory of interaction grounded in a full range of micro evidence and historical comparisons. Social determinism, extending even to the sociology of the intellectual networks that produce such theories as this, does not imply that it must be false. It is only a prejudice that theories must somehow exist independently of any social circumstances if they are to be true. To argue thus is to take truth as a transcendent reality in the same unreflective sense as we take our popular conception of "free will."

To insist on the ontological reality of free will has been the source of philosophical inconsistencies. Philosophers have been willing to accept the inconsistencies, just as theologians retreated to the mysteries of faith, because of extra-intellectual commitments. We will have those extra-intellectual commitments in our lives no matter what we do. But there is no need to admit more inconsistency into our intellectual beliefs than we have to.

In today’s intellectual atmosphere, it is widely regarded as morally superior to be a believer in agency, and to treat any discussion of determinism with disdain. I suggest there is more intellectual boldness, more sense of adventure, in short more EE in going beyond agency. Once we have broken the intellectual taboo on treating everything, including human will and human thought, as subject to exploration and explanation, a frontier opens up. Quiet your fears; we lose nothing morally or politically by doing so. Moral commitments and political action will go on whether we think they are explainable by IR chains (or some improved theory) or not. And as a matter of experience, it is entirely possible for you, as an individual, to be a participant in any form of social action while holding the belief, in one corner of your mind, that what you are doing right now is the working out of IR processes. This is one of the things that makes everything interesting to a sociologist.

APPENDIX The Philosophical Defense of Free Will

The predominant type of argument for free will has been a defensive one. Necessitarian philosophies have made general claims, on logical, theological, and ontological grounds; libertarian philosophies have always been in the position of seeking an exception. There must be reasons supporting freedom of action, because it seems such an important part of the human condition, and the idea of lack of freedom is so repellent. In Hellenistic philosophy, the Megarian logician Diodorus Cronos and after him the Stoic school argued that every statement about the future is either true or false, and hence everything that will happen is already logically determined. Debaters stressed the point that this would leave the world to Fate, and undermine the motivation of the individual to do anything at all; to which the Stoic Chrysippus made the rejoinder that the actions of the individual are also part of this determined pattern.

We see here the general mode of argument: necessitarian arguments are put forward on grounds of logical consistency, and libertarians reply that they find the results of this reasoning unacceptable because it undermines deeply held values. When libertarian philosophers have tried to bolster this by positive arguments, they have led themselves into metaphysical difficulties. The Epicureans countered the Stoics by introducing into their atomistic cosmology a swerve of the atoms, which should allow for human will. But even aside from the difficulty of demonstrating how this consequence follows, their construction was seen as arbitrary special pleading. Similarly Descartes posited a willing substance operating in parallel to the body with its material causality; but the point of contact between the two substances remained mysterious and arbitrary.

The same kind of problems arose in theological formulations. The metaphysical argument centering on the attributes of a supreme being leads with seeming inevitability to the power, omniscience, and foreknowledge of God, and these would appear to exclude human free will. Against this, primarily moral arguments were set forth: that humans should be responsible for their own salvation, or that God should not be responsible for evil. How to safeguard these beliefs without undermining God's absolute power has always been a conundrum. The orthodox Muslim theologians, after an early period of challenge from one of the philosophical schools, opted for the omnipotence of God in every respect. Christianity usually attempted to walk a tightrope between both sides, typically retreating from the claims of consistent reason into the claims of faith; free will was upheld theologically as a sacred mystery.

Secular philosophers have tended to compromise by asserting that freedom can coexist with necessity. Plato argued that all men desire to do what is Good; though this would seem to imply that every action is determined by the Good, Plato interpreted freedom as action of just this sort. Acting under the thrall of the passions would be both bad and unfree. Aristotle argued in parallel fashion that man as the reasonable animal is free when he follows reason rather than appetites. Kant echoed the traditional themes of Christian theology by locating free will both in the sphere of morals rather than of empirical causality, and in an epistemologically mysterious realm of the Ding-an-sich. This position proved unstable in philosophical metaphysics. Fichte almost immediately attempted to derive all existence from self-positing will. But Fichte's position too was unstable, and the more enduring form of his dialectic was that reformulated by Hegel, which expresses the usual metaphysical compromise in its most extreme form: the world is simultaneously the unfolding of reason and freedom, and freedom on the human level is identified with consciousness of necessity.

Most Western philosophers have felt it desirable to defend free will, but at the cost of either metaphysical difficulties or of diluting the meaning of freedom. Spinoza is a rare instance of a philosopher who valued intellectual consistency highly enough to embrace complete determinacy. Spinoza responded to the metaphysical difficulties of Descartes' two substances by positing a single substance with thinking and material aspects in parallel, subject to absolute necessity. His argument was generally considered scandalous.

At best, libertarians have been able to counter deterministic philosophies by taking refuge in a skeptical attack on the possibility of any definite knowledge at all. Carneades in the skeptical Middle Academy argued against Stoic determinism on the grounds that not even the gods can have reliable knowledge of events. And twentieth-century analytical philosophy, pursuing a skeptical tack, tended to poke holes in any definitive conception of causality. But skepticism cuts both ways, and post-Wittgensteinian philosophy (Ryle, Hampshire and others) has undermined the concept of "will" because it hypostatizes an entity, a "will" or an "intention," as a cause of subsequent actions. In actual usage, "will" or "intention" is merely a cultural category for interpreting an action in terms of its ends. In general, philosophical arguments have been unable to uphold free will directly, and the strategy of attacking the implications of the opposite position has tended to undermine free will as well.

Two patterns stand out. First, defenders of free will have based their arguments heavily upon moral considerations to be upheld at all costs, even the destruction of philosophical coherence. And second, this concern for free will is overwhelmingly a Western consideration. In fact, this is largely a Christian concern, although it is approached by some pre-Christian instances (notably the Epicureans). Plato, Aristotle and most other Greek philosophers were less concerned with human will than with issues of goodness and reason; Hellenistic debate was more focused on Fate and equanimity in the face of it. Freedom of the will was rarely raised explicitly until the main tradition of Christianity was formalized in late antiquity; in fact, this is one of the features that differentiates the victorious Christian church from rival contenders such as Gnostic sects and Manicheans. Freedom of the will goes along with distinctively Christian doctrines of the soul and what humans must do for salvation.

Christianity did not end up monopolizing free-will doctrine until after the traditions of Mediterranean monotheism had turned several bends of their road. In the early intellectual history of Islam, a group of rationalistic theologians, the Mu'tazilites, argued for human responsibility and free will, and developed a philosophy of causality in an attempt to reconcile these with God's omnipotence (Fakhry, 1983). They were opposed by more popular schools of scriptural literalists, who declared only predestination compatible with God's power. The secular schools of rationalistic philosophy which emerged at this time were predominantly Neo-Platonist, continuing the typical Greek indifference to the free-will issue. The Mu'tazilites lasted about 200 years (800-1000 A.D.), whereafter predestination went unchallenged. The Sufi mysticism which became prominent after 1000, although opposed to scriptural orthodoxy, was oriented away from the self and towards absorption in God.

Thus Christianity is not the only locus of free-will doctrines. On the other hand, within Christianity the importance of free will has fluctuated. It seems to have been unimportant in early Christianity before doctrine was crystallized by the theological "Fathers" of the late 300s A.D. Again, the early medieval period emphasized ritualism and contact with magical artifacts such as the bones of saints. But the Catholicism of the High Middle Ages (1000-1400) focused strongly on free will, and went to great lengths to explore the implications of free will as applied to God, as if projecting human free will to the highest ontological level. Although the Protestant Reformation initially stressed predestination, this emphasis was soon undermined and Protestantism became very "willful."

In sum: Islam and Christianity both explored the free-will and determinist sides of activist monotheism, but came out with contrasting emphases. Islam began with many of the same ingredients as Christianity. It was during the urban-based and politically centralized Abbasid caliphate that the free-will faction existed, although even then it was never dominant. After the disintegration of the unified Islamic state around 950, the scriptural literalists and the Sufis came to dominate cultural space, and the doctrine of individual responsibility and free will disappeared. Social conditions appear to underlie both the period of similarity between Muslim and Christian doctrines and the period in which they diverged, Christianity towards the free-will pole, Islam towards determinism. (This is treated in greater depth in Collins, The Sociology of Philosophies, 1998, chapters 8-9.)

In contrast to the activist religions of the West, free will is almost never a consideration within polytheistic or animistic religions; nor is it important within the Hindu salvation cults, nor in Buddhism, Taoism, or Confucianism. Buddhism, for example, builds upon the concept of karma, chains of action which constitute worldly causality; a willful self is one of those illusions which binds one to the world of name-and-form. Confucians argued at length about whether humans are fundamentally good or fundamentally evil, but not whether they could choose between them. Closest to an exception is the idealist metaphysics formulated around 1500 by the Neo-Confucian Wang Yang-ming. Yet although Wang's doctrine conceives of the world as composed of will, in a fashion similar to Fichte, Wang's emphasis is not on freedom but on the identity of thought and action in a cosmos consisting of the collective thoughts of all persons. These doctrinal comparisons show that free will is insisted upon only where there is a high value placed upon the conception of the individual self. And that happens chiefly in Western social institutions.